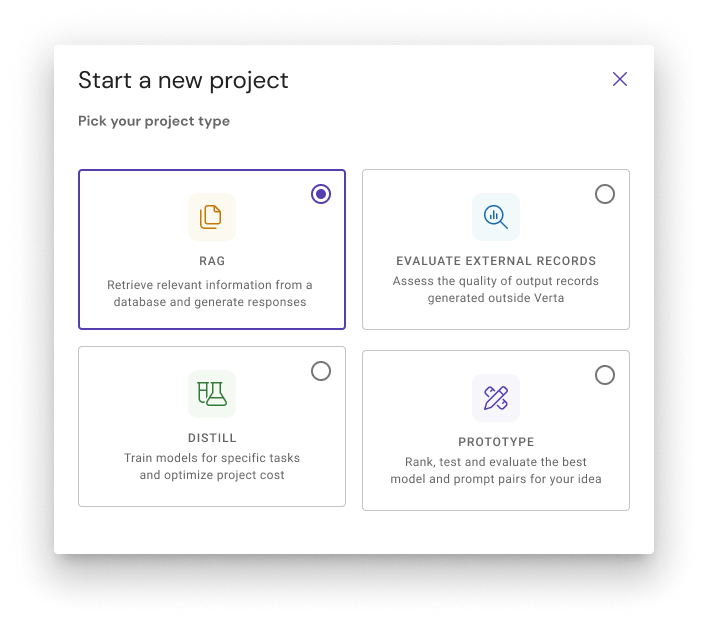

Retrieval-Augmented Generation

RAGs to Riches in minutes

Combine deep learning with dynamic information retrieval for up-to-date, relevant and accurate LLM outputs. AKA ask an LLM about anything you show it.

It's not literal magic but it feels like it.

For experts...

Streamlined path to trustworthy RAG

Do the least and get the most with the fastest RAG setup in the industry.

Go from dataset to custom RAG prototype in 5 minutes, no code needed. Embedded RLHF and automated evaluation mean you benchmark as you build and ship faster than ever before.

- No code means no environment nightmares. Configure, test and deploy with no headaches. At integration time, get a foolproof endpoint.

- No model training (time or money) needed

- Configure and tinker with vector DBs, embedding models and chunking - or don't worry about it

- Easy maintenance and scaling - give your SMEs access to the UI to update the knowledge base or label benchmark datasets

- Every output comes with automatic evaluation and hallucination detection

and everyone else

Go beyond foundational models

Generate AI answers from your trusted and private data sources. Combine the power of pre-trained LLMs and your data. Get it working in 5 minutes with Verta.

Get the most timely, relevant and important results by using retrieval-augmented generation. When users ask a question, an LLM answers based on your knowledge base - your proprietary expertise and data. Build an AI-powered solution that is as up to date and nuanced as your business and data.

- Leverage this industry-defining technique with no ML expertise. Embedding what? Chunking who?

- Trust every prediction - Answers come with embedded citations and a warning if hallucinations are suspected

- Easily create and maintain your knowledge base from your PDFs, spreadsheets, etc. Update it any time via the UI

- Choose from leading foundational models to power your solution
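
Curious what happens under the hood? The flow described above (a question is matched against your knowledge base, and the LLM answers from the best-matching passages with citations) can be sketched in a few lines of Python. This is only an illustrative sketch, not Verta's implementation or API: the function names (`chunk`, `embed`, `retrieve`, `build_prompt`), the sample documents and the word-count "embedding" are all assumptions made for the example. A real setup would use a learned embedding model and a vector DB, and would send the final prompt to the LLM of your choice.

```python
import math
import re
from collections import Counter


def chunk(text, size=40):
    """Split a document into pieces of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]


def embed(text):
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def build_index(documents):
    """Chunk every document and store each chunk with its vector and source."""
    index = []
    for source, text in documents.items():
        for piece in chunk(text):
            index.append({"source": source, "text": piece, "vector": embed(piece)})
    return index


def retrieve(index, question, k=2):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda e: cosine(q, e["vector"]), reverse=True)
    return ranked[:k]


def build_prompt(question, hits):
    """Assemble the prompt for the LLM, numbering sources so it can cite them."""
    sources = "\n".join(
        f"[{i + 1}] ({h['source']}) {h['text']}" for i, h in enumerate(hits)
    )
    return (
        "Answer using only the sources below and cite them by number.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}\nAnswer:"
    )


# Hypothetical knowledge base: file name -> extracted text.
docs = {
    "returns.txt": "Customers may return unused items within 30 days of delivery for a full refund.",
    "shipping.txt": "Standard shipping takes three to five business days within the country.",
}

question = "Can I return unused items for a refund?"
index = build_index(docs)
hits = retrieve(index, question, k=1)
prompt = build_prompt(question, hits)
print(prompt)
```

The numbered sources in the prompt are what make embedded citations possible: the model is asked to answer only from the retrieved passages and to point back to them by number.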