About the Author

My name is Cory Johannsen, and I am an APM veteran with over 20 years of experience in software engineering. I have worked inside some of the largest data pipelines in the world, monitoring tens of millions of devices and applications and making that data accessible to the developers who maintained them. Now I lead monitoring development at Verta, an end-to-end MLOps platform that provides model delivery, operations, and monitoring. When I started at Verta last year, I assumed model monitoring and APM were the same, but through experience I’ve identified some major differences. Here’s what I’ve learned.

What is APM?

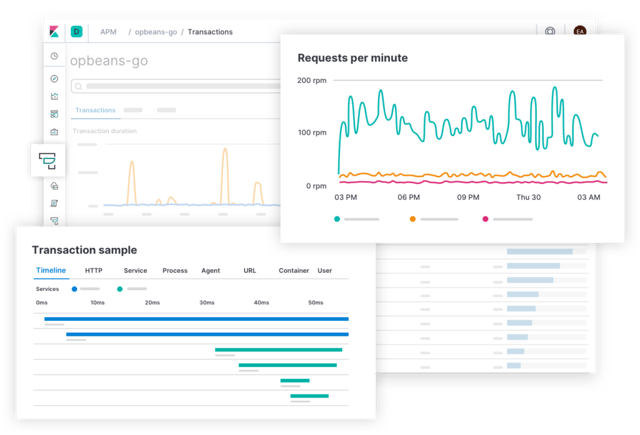

APM (Application Performance Monitoring) is a class of monitoring focused on the performance characteristics of production software systems. APM data consists of metrics, each combining a name, a value, a set of labels, and a timestamp. APM systems are designed to measure and store vast quantities of metrics and to provide a simple way to query them back.
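To make that data model concrete, here is a minimal Python sketch of a metric record and a toy in-memory store that can query metrics back by name, time range, and labels. The `Metric` and `MetricStore` names, the seconds-since-epoch timestamp, and the exact-match label filter are illustrative assumptions, not the format of any particular APM product.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Metric:
    """One APM data point: a name, a value, some labels, and a timestamp."""
    name: str
    value: float
    labels: Dict[str, str]
    timestamp: float  # assumed: seconds since the Unix epoch


class MetricStore:
    """Toy in-memory store (hypothetical); real APM backends use time-series databases."""

    def __init__(self) -> None:
        self._points: List[Metric] = []

    def record(self, metric: Metric) -> None:
        """Append one data point."""
        self._points.append(metric)

    def query(self, name: str, start: float, end: float,
              labels: Optional[Dict[str, str]] = None) -> List[Metric]:
        """Return points named `name` with start <= timestamp < end whose labels match exactly."""
        wanted = labels or {}
        return [
            m for m in self._points
            if m.name == name
            and start <= m.timestamp < end
            and all(m.labels.get(k) == v for k, v in wanted.items())
        ]
```

The simplicity is the point: every APM data point has the same shape, which is what lets APM systems store and retrieve metrics at enormous scale.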

What is Model Monitoring?

When I started at Verta, I thought about monitoring in terms of performance metrics. As I learned more, I realized that the goal of model monitoring is to provide assurance that the results of applying a model are both consistent and reliable. You need to know when models are failing, and failing has multiple meanings: a model can fail to operate, it can operate smoothly but produce incorrect results, or it can behave sporadically or unpredictably. When a model fails, you must quickly diagnose the root cause of the problem.

Knowing when a model has failed is not a simple problem. Without a ground truth reference to measure against, failures can be difficult to detect. Determining whether a deviation has occurred involves complex statistical summaries collected over time. These could be metrics, distributions, histograms, or any other statistical measurement that is important to the model, and they are specific to both the subject matter and the model.

Model monitoring adds two new dimensions to the problem: data quality and data drift. We need to know that the model’s data itself is reliable, and we need to know how that data changes over time.
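As a minimal sketch of a data quality check, the function below summarizes one numeric feature column by its fraction of missing values and its fraction of values outside owner-supplied bounds. The function name, the bounds, and the choice of these two checks are hypothetical; which checks actually matter depends on the model.

```python
import math
from typing import Dict, Optional, Sequence


def data_quality_report(values: Sequence[Optional[float]],
                        lower: float, upper: float) -> Dict[str, float]:
    """Summarize basic quality of one numeric feature column (hypothetical checks).

    `lower` and `upper` are owner-supplied bounds for valid values.
    None and NaN both count as missing.
    """
    total = len(values)
    if total == 0:
        return {"missing_fraction": 0.0, "out_of_range_fraction": 0.0}
    present = [v for v in values if v is not None and not math.isnan(v)]
    missing = total - len(present)
    out_of_range = sum(1 for v in present if not lower <= v <= upper)
    return {
        "missing_fraction": missing / total,
        "out_of_range_fraction": out_of_range / total,
    }
```

For example, an age column `[34, None, 29, -5, 41, float("nan")]` checked against bounds 0 to 120 reports a missing fraction of 2/6 and an out-of-range fraction of 1/6. Which fractions are acceptable is, again, the owner's call.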

What Makes ML Monitoring Unique

As I learned more about model monitoring, I came to understand that it is a superset of APM. However, the additional qualitative needs of model monitoring mean that APM alone is no longer a sufficient solution. Models have long, complex life cycles, and monitoring needs to be integrated from the beginning to produce the traceability required to detect issues, uncover why a deviation has occurred, and determine how to remediate it. Monitoring data, models, and pipelines brings an entirely new set of concerns to the table.

Complex Metrics and Statistics

Assessing model performance is not a simple comparison of two metric aggregates. It is a controlled comparison of statistics and distributions across time. To provide value, it must be repeatable, reliable, and consistent: if I run the same experiment one million times, the monitoring output must be consistent across runs, or the value of the comparison is lost. Meaningful deviations from the norm must be able to drive alerts that are immediately actionable. That action could be an automatic self-remediation that rolls back a canary deployment, or a Slack message that tells the owner what is wrong and connects them to the resources needed to address it as quickly as possible.
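One way to make a distribution comparison repeatable is to bin both the reference and the current data with the same fixed bin edges, so identical inputs always produce identical results. The sketch below uses the Population Stability Index (PSI), one common drift measure, swapped in here purely for illustration; it is not necessarily what Verta uses. The bin edges, the `eps` floor for empty bins, the threshold, and the `alert_owner` action name are hypothetical choices a model owner would make.

```python
import math
from bisect import bisect_right
from typing import List, Sequence


def binned_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Fraction of values in each bin defined by sorted `edges`.

    Bins are (-inf, e0), [e0, e1), ..., [e_last, +inf), so every value lands
    in exactly one bin. Fixed edges make repeated runs produce identical output.
    """
    if not values:
        raise ValueError("cannot bin an empty sample")
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[bisect_right(edges, v)] += 1
    return [c / len(values) for c in counts]


def population_stability_index(reference: Sequence[float], current: Sequence[float],
                               edges: Sequence[float], eps: float = 1e-6) -> float:
    """PSI = sum over bins of (cur - ref) * ln(cur / ref); 0.0 for identical binned data."""
    ref = binned_fractions(reference, edges)
    cur = binned_fractions(current, edges)
    psi = 0.0
    for r, c in zip(ref, cur):
        r, c = max(r, eps), max(c, eps)  # floor empty bins to avoid log(0)
        psi += (c - r) * math.log(c / r)
    return psi


def check_drift(reference: Sequence[float], current: Sequence[float],
                edges: Sequence[float], threshold: float) -> str:
    """Map the drift score to a hypothetical action; a real system might page or roll back."""
    psi = population_stability_index(reference, current, edges)
    return "alert_owner" if psi > threshold else "ok"
```

Because the bin edges are fixed rather than recomputed from each new sample, running the same comparison twice on the same data always yields the same score, which is the repeatability property described above.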

Customization

APM agents are designed to work out of the box. They know in advance how to collect the data you are most likely to need, and this is what makes them so powerful. Model monitoring, however, does not fit into that box. This is an important difference to understand: in model monitoring, only you (the model owner) have the information needed to know what should be monitored. This is because every model and pipeline is different and highly specialized. You designed, built, trained, and operated these models; you are the expert, and only you know which metrics and distributions are worth measuring. Ultimately, only you know what the expected outcomes are for your models and how to determine correctness.

Domain Expertise

In the world of APM, the comparison is predefined as part of the system. Evaluations come down to comparing two time windows and calculating the delta between their metric values. In model monitoring, the comparison is not a single, fixed concept. It is inherent in the model development process and evolves as the model evolves and matures. I realized that in model monitoring, deciding when a change is meaningful and significant is no longer a simple comparison between two predetermined values. Only the owner of a model knows which deviations are acceptable and how to tell when one is significant (or not!). Models and pipelines are living systems. They change over time, and only the owner of that system can know when the tolerances change and how that should affect overall performance.

This all comes down to the most important aspect of any monitoring system: only the owner of a model or pipeline knows when it should alert and what actions should be taken. APM systems have a downside here: they make tracking and alerting so easy that it is tempting to go overboard, and the pager starts buzzing, often resulting in alert fatigue. In model monitoring, only the model owner can decide whether a condition should create an alert, based on their knowledge of the model.
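The contrast can be sketched in a few lines of Python. `apm_window_delta` is the kind of fixed, predefined two-window comparison an APM system performs, while `make_tolerance_rule` builds an alert rule from parameters only the model owner can choose. Both function names, the mean-shift rule, and the minimum-sample guard are hypothetical illustrations, not an API from Verta or any APM product; the inputs stand for some per-window model statistic the owner has decided to track.

```python
from statistics import mean
from typing import Callable, Sequence


def apm_window_delta(previous: Sequence[float], current: Sequence[float]) -> float:
    """APM-style check: relative change in the mean between two time windows."""
    prev = mean(previous)
    if prev == 0:
        raise ValueError("previous window mean is zero; relative delta is undefined")
    return (mean(current) - prev) / prev


# An owner-defined rule takes the two windows and decides whether to alert.
AlertRule = Callable[[Sequence[float], Sequence[float]], bool]


def make_tolerance_rule(max_abs_shift: float, min_samples: int) -> AlertRule:
    """Hypothetical rule encoding the owner's knowledge of the model:
    alert only with enough data and a mean shift beyond the owner's tolerance."""
    def rule(previous: Sequence[float], current: Sequence[float]) -> bool:
        if len(current) < min_samples:
            return False  # too little data to judge; avoid paging on noise
        return abs(mean(current) - mean(previous)) > max_abs_shift
    return rule
```

The rule is a plain callable supplied by the owner, so it can be replaced as the model and its tolerances evolve; the minimum-sample guard is one example of owner knowledge that keeps the pager quiet when there is too little data to judge.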

Summary

I am at Verta because I see MLOps customers struggling to access the tools they need to do their jobs efficiently, and I want to help solve that problem. I remember high-severity incidents where my tools made the difference between an outage and a non-event. I believe MLOps teams need access to tools that provide the same capabilities for the new problem domain that has emerged.
